Understanding the Black Box - Part 2

Agents are opaque, yet we are embedding them into nearly every digital interaction we have.

Before we jump into the next steps, here is a quick recap of Part 1.

Since their rise in 2022, LLMs built on the transformer architecture, such as ChatGPT, Gemini, and Claude, have revolutionized how humans interact with AI, software, and computers. By 2025, their influence has expanded into image and video generation with systems like OpenAI’s Sora, Meta’s Vibes, and xAI’s Grok. Yet despite their transformative capabilities, the mechanisms driving their intelligence remain largely mysterious. Unlike traditional software, which follows explicit, human-written instructions, LLMs learn from vast amounts of text data. Through this training process they develop a dense network of billions, and in some cases trillions, of parameters that can encode knowledge, reasoning, and creativity, while offering little insight into how they do so. This opacity has given rise to the field of mechanistic interpretability, which aims to uncover how these systems actually work.

The first article in this series introduced how transformers process information. It explained how text is first tokenized into numerical representations (token IDs), how those tokens are mapped to embedding vectors that capture meaning, and how information flows through the residual stream, a shared workspace that each transformer layer reads from and writes to as it refines the model’s understanding. Together, these steps form the foundation for how transformers represent meaning and context.
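To make the recap concrete, here is a minimal Python sketch of those three steps. It assumes a made-up five-word vocabulary, a whitespace “tokenizer”, random embeddings, and a placeholder `toy_layer` function standing in for a real transformer layer. All of these are hypothetical: real models use subword tokenizers and learned weights.

```python
import random

# Hypothetical toy setup: a whitespace "tokenizer" over a five-word vocabulary.
VOCAB = {"the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4}
D_MODEL = 4  # embedding width (real models use thousands of dimensions)

random.seed(0)
# Embedding matrix: one vector per token ID (random here, learned in a real model).
EMBED = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in VOCAB]

def tokenize(text):
    """Step 1: map text to integer token IDs."""
    return [VOCAB[word] for word in text.lower().split()]

def embed(token_ids):
    """Step 2: look up a copy of the embedding vector for each token ID."""
    return [list(EMBED[t]) for t in token_ids]

def toy_layer(residual):
    """Step 3 stand-in for a layer: compute an update for each position
    and ADD it to the residual stream rather than overwriting it."""
    updates = [[0.1 * x for x in vec] for vec in residual]
    return [[r + u for r, u in zip(vec, upd)] for vec, upd in zip(residual, updates)]

ids = tokenize("The cat sat on the mat")
stream = embed(ids)
for _ in range(2):  # two stacked layers refine the same shared workspace
    stream = toy_layer(stream)
print(ids)  # [0, 1, 2, 3, 0, 4]
```

Both occurrences of “the” end up with identical vectors. Nothing in this pipeline lets one position see another, and that is exactly the gap attention fills.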

Step 4: How attention heads let transformers use context and move information between tokens

The embedding matrix gives each word its standalone meaning, but understanding language requires more than that. The real breakthrough in transformers is the attention mechanism, which enables models to connect words across a sentence and interpret them in context.

Take the word “bank”. It means something entirely different in “I swam near the river bank” than in “I got cash from the bank.” Attention allows the model to work out which meaning fits by relating words to one another.
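Here is a stripped-down way to see the idea. Treat each word as a hand-picked two-dimensional vector, with one axis loosely meaning “finance” and the other “nature”. Score the last word against every word in the sentence with a dot product, then blend in the context in proportion to those scores. This is a toy sketch built on stated assumptions, not a real attention head: there are no learned query, key, or value projections, and both the vectors and the sharpness factor of 4.0 are invented for illustration.

```python
import math

# Hand-picked toy vectors (an assumption for illustration):
# dimension 0 ~ "finance", dimension 1 ~ "nature/geography".
VECS = {
    "i": [0.0, 0.0], "swam": [0.1, 0.6], "near": [0.0, 0.1], "the": [0.0, 0.0],
    "river": [0.0, 1.0], "got": [0.2, 0.0], "cash": [1.0, 0.0], "from": [0.0, 0.0],
    "bank": [0.5, 0.5],  # ambiguous on its own
}

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def contextualize_last(sentence):
    """Blend the last word's vector with the sentence's words, weighted by similarity."""
    words = sentence.lower().split()
    query = VECS[words[-1]]
    keys = [VECS[w] for w in words]  # the word may also attend to itself
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax([4.0 * s for s in scores])  # 4.0: arbitrary sharpness
    mixed = [sum(w * key[d] for w, key in zip(weights, keys)) for d in range(2)]
    return [q + m for q, m in zip(query, mixed)]  # add back, residual-style

river = contextualize_last("I swam near the river bank")
cash = contextualize_last("I got cash from the bank")
print(river, cash)
```

The river sentence yields a “bank” vector tilted toward the nature axis, while the cash sentence tilts it toward finance, even though “bank” starts from the same vector in both.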

An attention layer contains multiple attention heads that operate in parallel, each focusing on different relationships between tokens. Every head has two core components:

QK (Query–Key) circuit: Decides where to look for relevant information. For each token being processed (the query), it scores how relevant every token up to and including itself (the keys) is. A softmax turns these scores into probabilities, called the attention pattern, which tells the model how much attention to give each earlier token.

OV (Output–Value) circuit: Determines what information to bring over. Each source token produces a value vector. The destination token (the query) receives a weighted average of these values, with the weights given by the attention pattern that the QK circuit computed. The result is projected by the head’s output matrix and added back into the residual stream at the destination token’s position.
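The two circuits can be sketched for a single head in plain Python. The sizes (`D_MODEL`, `D_HEAD`) and the random matrices `W_Q`, `W_K`, `W_V`, `W_O` are placeholders for learned parameters, and the function names are hypothetical. Scores are scaled by the square root of the head dimension, as in the standard transformer. The restriction to earlier tokens is handled simply by only scoring positions 0 through i.

```python
import math
import random

random.seed(1)
D_MODEL, D_HEAD = 4, 2  # toy sizes; an assumption for illustration

def rand_matrix(rows, cols):
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(vec, matrix):
    """Row vector (length = rows) times matrix (rows x cols) -> length cols."""
    return [sum(v * matrix[r][c] for r, v in enumerate(vec)) for c in range(len(matrix[0]))]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

# Hypothetical random weights for ONE head (learned in a real model).
W_Q, W_K = rand_matrix(D_MODEL, D_HEAD), rand_matrix(D_MODEL, D_HEAD)
W_V, W_O = rand_matrix(D_MODEL, D_HEAD), rand_matrix(D_HEAD, D_MODEL)

def attention_head(residual):
    queries = [matvec(x, W_Q) for x in residual]
    keys = [matvec(x, W_K) for x in residual]
    values = [matvec(x, W_V) for x in residual]
    new_residual, patterns = [], []
    for i, q in enumerate(queries):
        # QK circuit: score query i against keys 0..i, then softmax into a pattern.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(D_HEAD)
                  for j in range(i + 1)]
        weights = softmax(scores)
        patterns.append(weights)
        # OV circuit: weighted average of values, projected back by W_O.
        mixed = [sum(w * values[j][d] for j, w in enumerate(weights)) for d in range(D_HEAD)]
        out = matvec(mixed, W_O)
        # Add the head's output into the residual stream at position i.
        new_residual.append([x + o for x, o in zip(residual[i], out)])
    return new_residual, patterns

residual = rand_matrix(3, D_MODEL)  # three toy token positions
updated, patterns = attention_head(residual)
```

Each entry of `patterns` is one query’s probability distribution over the tokens it may look at. The head only ever adds to the residual stream, so information already there is preserved.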

When a token gives another a high attention score, it’s like saying, “That’s the information I need.”

Importantly, in GPT-style (decoder-only) language models, a causal mask means a query token can attend only to itself and the tokens before it, never to future ones.
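In practice, this restriction is usually implemented as a mask on the full score matrix. Scores for future positions are set to negative infinity before the softmax, so they receive exactly zero weight. A minimal sketch, using arbitrary made-up scores:

```python
import math

def causal_softmax(scores):
    """Apply a causal mask to a square score matrix, then softmax each row.

    scores[i][j] is how strongly query position i matches key position j.
    Entries with j > i (future tokens) become -inf before the softmax,
    so they receive exactly zero attention weight.
    """
    n = len(scores)
    pattern = []
    for i in range(n):
        masked = [scores[i][j] if j <= i else -math.inf for j in range(n)]
        m = max(masked)  # always finite, because j == i is never masked
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        pattern.append([e / total for e in exps])
    return pattern

# Arbitrary scores: even a very large score for a future token is ignored.
scores = [[0.0, 9.0, 9.0],
          [1.0, 2.0, 9.0],
          [0.5, 0.5, 0.5]]
for row in causal_softmax(scores):
    print([round(w, 3) for w in row])
```

Row 0, the first token, can only attend to itself, so its weights are [1.0, 0.0, 0.0] despite its very large scores for the two future tokens.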

Intuition: Think of each query as asking a question about all earlier words, and the keys and values as providing the answers.

A key mechanism: Induction heads

One particularly interesting type of attention head is the induction head, which is thought to drive much of what’s known as in-context learning: a model’s ability to pick up patterns or rules directly from examples in the prompt.

An induction head follows a simple algorithm:

If token A was followed by token B earlier in the text, then the next time A appears, predict that B will follow again.
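Written as ordinary code, the rule is tiny. The sketch below uses a hypothetical `induction_predict` helper to express the symbolic algorithm an induction head approximates. It is not code taken from any model: a real head implements the rule softly through attention weights, and picking the most recent earlier match is a simplifying choice.

```python
def induction_predict(tokens):
    """Toy induction rule: if the last token A appeared earlier followed by B,
    predict B. Returns None when there is no earlier occurrence to copy from."""
    current = tokens[-1]
    # Scan earlier positions from most recent to oldest (a simplifying choice).
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None

print(induction_predict(["xq", "zv", "pl", "ok", "xq"]))  # zv
```

The made-up tokens make the point: the pairing “xq zv” could not have been memorized in training, yet the rule continues it from the prompt alone.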

This lets the model continue patterns it has never seen during training, such as a made-up name or a random sequence that repeats within the prompt.

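In the simplest case (a two-layer model), the induction circuit involves two heads working together. Consider a prompt in which the phrase “sat on” appears earlier and the word “sat” appears again at the end:

The previous-token head in the first layer copies information about each token into the position that follows it (for example, information about “sat” is copied into the position of “on”).

The induction head in the second layer looks for an earlier position whose previous token matches the current token (“sat”), attends to that position (“on” in this case), and boosts the probability of generating “on” next.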
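The two-head version can be mimicked with explicit lookups instead of vectors. In this sketch, the helpers are hypothetical, the prompt is made up, and hard matches stand in for soft attention scores. The first function plays the previous-token head, and the second plays the induction head reading what the first one wrote:

```python
def previous_token_head(tokens):
    """Layer 1 (toy): each position stores which token came just before it.
    Position 0 has no predecessor, so it stores None."""
    return [None] + tokens[:-1]

def induction_head(tokens, prev_info):
    """Layer 2 (toy): the last position is the query. It attends to every
    earlier position whose stored previous token matches the current token,
    then copies each attended position's own token as a prediction."""
    current = tokens[-1]
    attended = [j for j in range(len(tokens) - 1) if prev_info[j] == current]
    # Each attended position casts an equal share of the vote for its own token.
    votes = {}
    for j in attended:
        votes[tokens[j]] = votes.get(tokens[j], 0) + 1 / len(attended)
    return attended, votes

tokens = "the dog sat on a log and then the dog sat".split()
attended, votes = induction_head(tokens, previous_token_head(tokens))
print(attended, votes)  # [3] {'on': 1.0}
```

Note that the induction head never compares “sat” with “sat” directly. It matches against the previous-token information stored at the “on” position, which is why it needs a head in an earlier layer to have written that information first.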

This behaviour shows that transformers can learn algorithms rather than just memorize data. And because induction heads only appear in models with at least two layers (one head has to build on the output of another), they are evidence that deeper models develop qualitatively new capabilities.

In attention visualisations of a repeated random sequence, induction heads appear as an off-center diagonal stripe: each token in the second copy attends to the token that followed its earlier occurrence.
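The off-center diagonal falls directly out of the rule. This sketch builds a hand-made induction attention pattern (not one extracted from a real model) for a random sequence of made-up tokens repeated twice. It then prints the pattern with `#` wherever a query position attends:

```python
import random

def induction_pattern(tokens):
    """Toy induction-head attention pattern (hand-built, not learned).

    Query position i attends, uniformly, to every position j <= i whose
    PREVIOUS token equals tokens[i], i.e. the token right after an earlier
    occurrence of the current token.
    """
    n = len(tokens)
    pattern = [[0.0] * n for _ in range(n)]
    for i in range(n):
        matches = [j for j in range(1, i + 1) if tokens[j - 1] == tokens[i]]
        for j in matches:
            pattern[i][j] = 1.0 / len(matches)
    return pattern

random.seed(0)
T = 6
# A random sequence of distinct made-up tokens, repeated once.
first = [f"tok{n}" for n in random.sample(range(1000), T)]
tokens = first + first

# Rows are query positions, columns are key positions; '#' marks attention.
for row in induction_pattern(tokens):
    print("".join("#" if w > 0 else "." for w in row))
```

The first six rows are empty because nothing has repeated yet. In the second half, row i attends to column i - 5, a stripe parallel to the main diagonal but shifted T - 1 positions to the left.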

Understanding attention through indirect object identification (IOI)

Another fascinating example of attention at work comes from a task called indirect object identification (IOI). A typical IOI prompt looks like this:

When Mary and John went to the store, John gave a drink to...

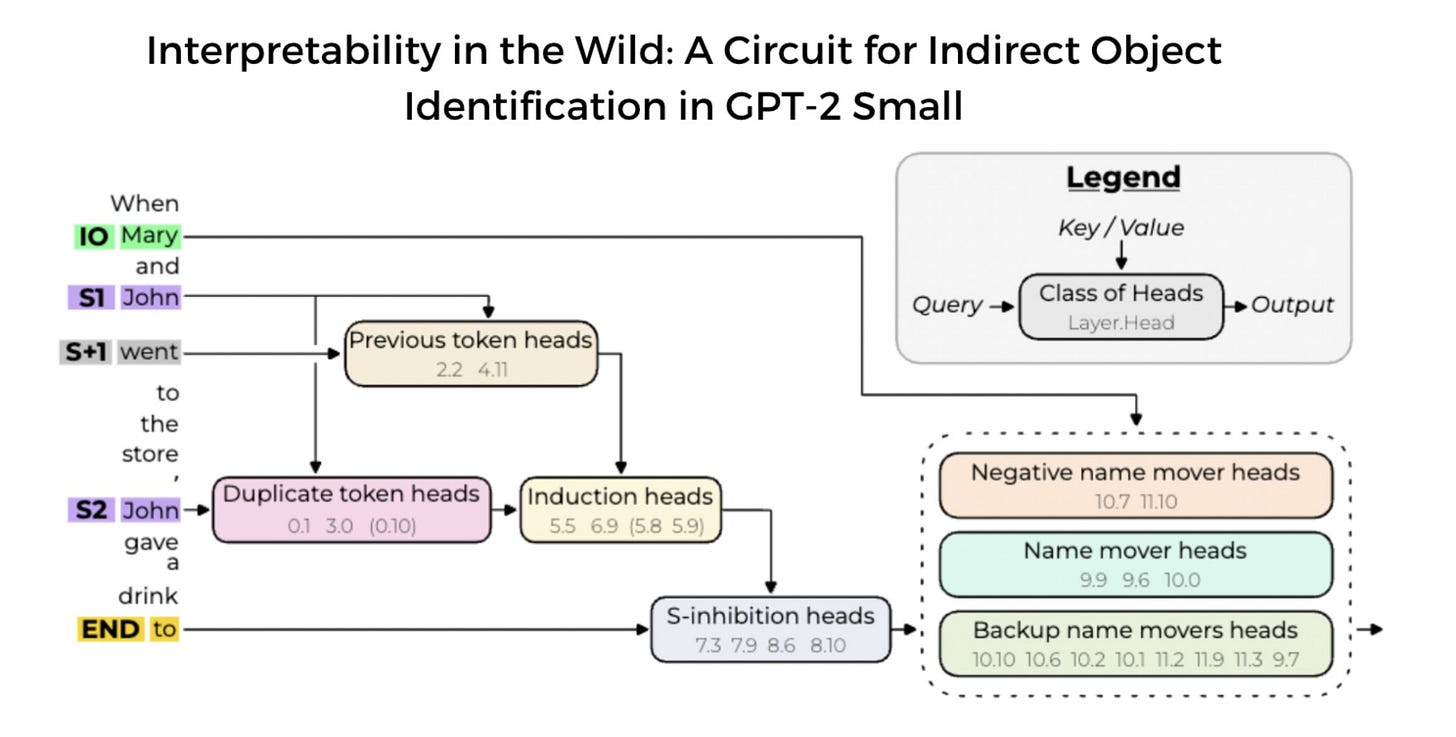

The correct answer is Mary. In 2022, researchers at Redwood Research reverse-engineered how GPT-2 small solves this task, finding a network of specialized attention heads arranged in a three-step circuit:

Identify all names in the sentence (Mary, John, John).

Filter out duplicates (John).

Output the remaining name (Mary).
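The three steps can be written as a symbolic program. This is only a sketch of the algorithm the circuit implements, and it assumes a hypothetical fixed `KNOWN_NAMES` set. GPT-2 has no such lookup and performs these steps with attention heads rather than explicit counting.

```python
from collections import Counter

# Hypothetical name list: the model has no such lookup; it learns what a name is.
KNOWN_NAMES = {"Mary", "John"}

def ioi_answer(sentence):
    """Symbolic version of the three IOI steps (not GPT-2's actual computation)."""
    words = [w.strip(".,") for w in sentence.split()]
    # Step 1: identify all names, in order.
    names = [w for w in words if w in KNOWN_NAMES]
    # Step 2: filter out any name that occurs more than once (the subject).
    counts = Counter(names)
    remaining = [n for n in names if counts[n] == 1]
    # Step 3: output the remaining name, if exactly one is left.
    return remaining[0] if len(remaining) == 1 else None

print(ioi_answer("When Mary and John went to the store, John gave a drink to..."))  # Mary
```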

These steps are carried out by three main groups of heads:

Duplicate Token Heads: Detect repeated names and connect the later one to its earlier instance.

S-Inhibition Heads: Suppress the duplicated name (John), mainly by stopping the Name Mover Heads from attending to it, so that it does not influence the model’s next prediction.

Name Mover Heads: Copy the correct (non-duplicated) name to the final position, ensuring the model predicts Mary.
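To see how the head groups divide the work, here is a toy scoring model that uses hand-set numbers rather than GPT-2 weights. The `inhibition` parameter is a hypothetical knob standing in for the S-Inhibition Heads’ effect. The Name Mover step is modeled as attention over the name positions, followed by copying each attended name into the output logits. For simplicity, both occurrences of the duplicated name are flagged.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def toy_ioi_circuit(names, inhibition=5.0):
    """names: the name tokens in order, e.g. ["Mary", "John", "John"].
    Returns the Name Movers' attention weights per name position and the
    resulting (toy) logit for each name."""
    # Duplicate Token Heads (toy): find names that occur more than once.
    duplicated = {n for i, n in enumerate(names) if n in names[:i]}
    flags = [n in duplicated for n in names]
    # S-Inhibition Heads (toy): lower the Name Movers' score on flagged positions.
    scores = [-inhibition if flagged else 0.0 for flagged in flags]
    weights = softmax(scores)
    # Name Mover Heads (toy): copy each attended name into the output logits.
    logits = {}
    for name, w in zip(names, weights):
        logits[name] = logits.get(name, 0.0) + w
    return weights, logits

weights, logits = toy_ioi_circuit(["Mary", "John", "John"])
print(max(logits, key=logits.get))  # Mary
```

With the default `inhibition=5.0`, almost all attention lands on Mary. Setting `inhibition=0.0` switches the S-Inhibition step off. Attention then spreads evenly over the three names, and John wins because he appears twice. This simple illustration shows why suppressing the duplicate matters.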

This IOI circuit highlights how complex reasoning can emerge from the coordination of many attention heads, each performing a small, specialized role within a larger mechanism.

Sources

Indirect Object Identification in GPT-2: https://arxiv.org/abs/2211.00593

Neel Nanda: A Walkthrough of Interpretability in the Wild (w/ authors Kevin Wang, Arthur Conmy & Alexandre Variengien)

https://www.alignmentforum.org/posts/3ecs6duLmTfyra3Gp/some-lessons-learned-from-studying-indirect-object

https://transformer-circuits.pub/2021/framework/index.html

https://aignishant.medium.com/unraveling-the-magic-of-q-k-and-v-in-the-attention-mechanism-with-formulas-035cb0781905

https://transformer-circuits.pub/2022/in-context-learning-and-induction-heads/index.html

https://medium.com/data-science/what-are-query-key-and-value-in-the-transformer-architecture-and-why-are-they-used-acbe73f731f2

https://www.lesswrong.com/posts/XGHf7EY3CK4KorBpw/understanding-llms-insights-from-mechanistic